This blog post was written in collaboration with Ofcom’s Behavioural Insight Hub.

Being online opens a world of opportunity. But it also risks exposure to harm. Almost 3 in 10 adults (27%) say they have recently been exposed to potentially harmful content on social media.

One response is to allow people to control the content they see, typically giving them a choice between seeing ‘All content’ or ‘Reduced sensitive content’ on their social media feeds. But only 26% of people say they use these content controls. Lack of awareness is one of the issues, but how platforms design their tools may create barriers as well. For example, defaults that don’t match users’ needs, a complicated layout, and technical jargon can make it hard for users to interact with online controls.

To examine how the design of platforms might help – or hinder – people from making informed choices about the content they see, BIT and Ofcom ran two trials.

We tested how people’s behaviour was affected by the way information on content controls was presented to them, and by the way they were prompted to check those controls. We aimed to motivate people to make an informed choice that reflected their preferences, without steering them towards either option.

What could be driving low engagement with these choices?

One potential reason is that people may not understand what’s meant by ‘sensitive content’. People may also not pay attention to the information since they just want to get to the feed.

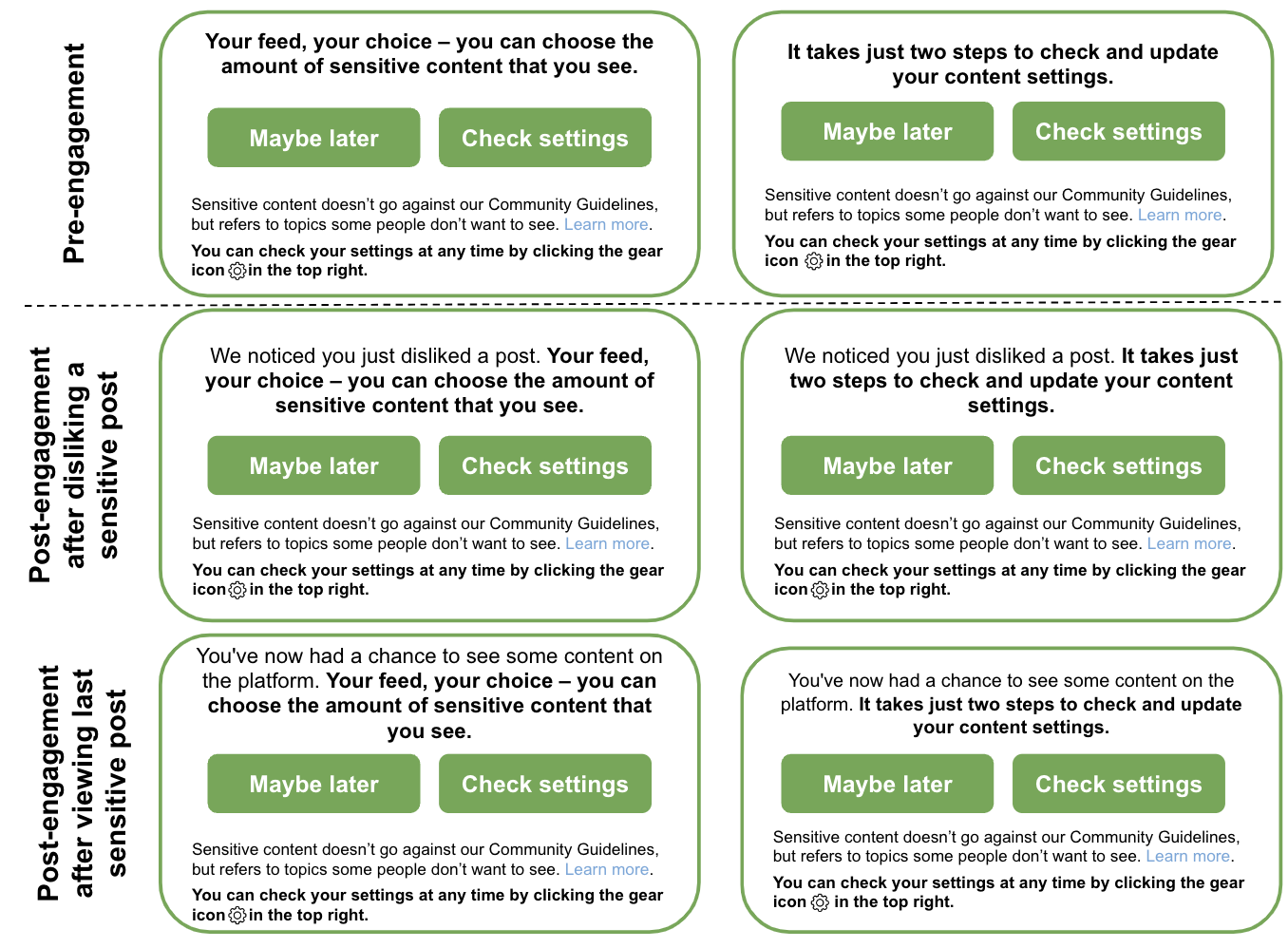

In our Sign-up trial, we designed interventions to explain what ‘sensitive content’ is in a way that was short, easy to notice and easy to follow. This included removing the extra click to see the examples (‘Info saliency’) and presenting the information via a short step-by-step tutorial (‘Microtutorials’) in which people were forced to pause on each piece of information. We also varied which option (if any) was pre-selected. Users made a choice at sign-up and then browsed a feed. After a period of browsing, users were asked to review their settings, and we recorded whether they changed their initial choice.*

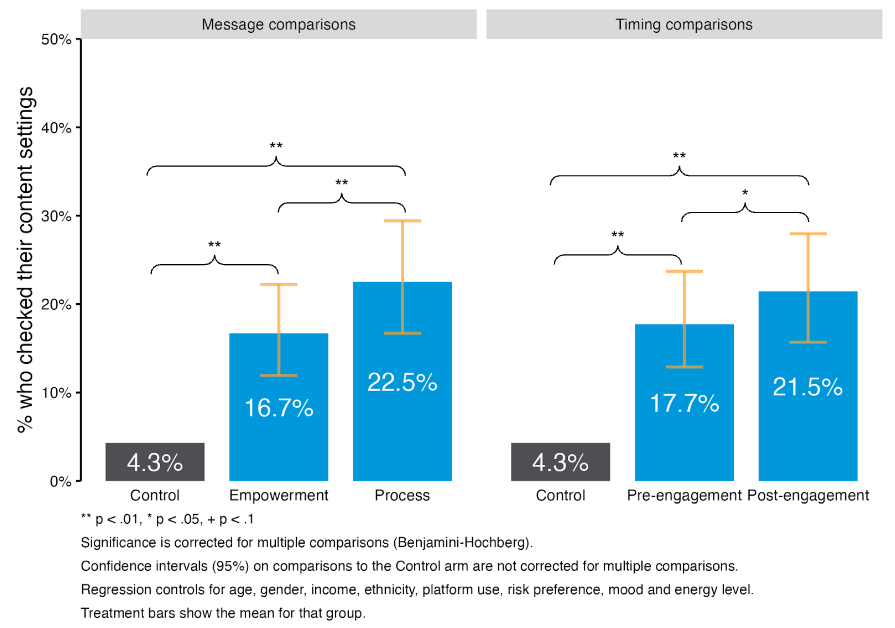

In our Check-and-update trial, we instead offered different prompts, in the form of pop-ups during a browsing session, to see whether the timing and framing of a prompt could increase users’ motivation to check their settings.

Sign-up trial: Making an active, informed choice at sign-up

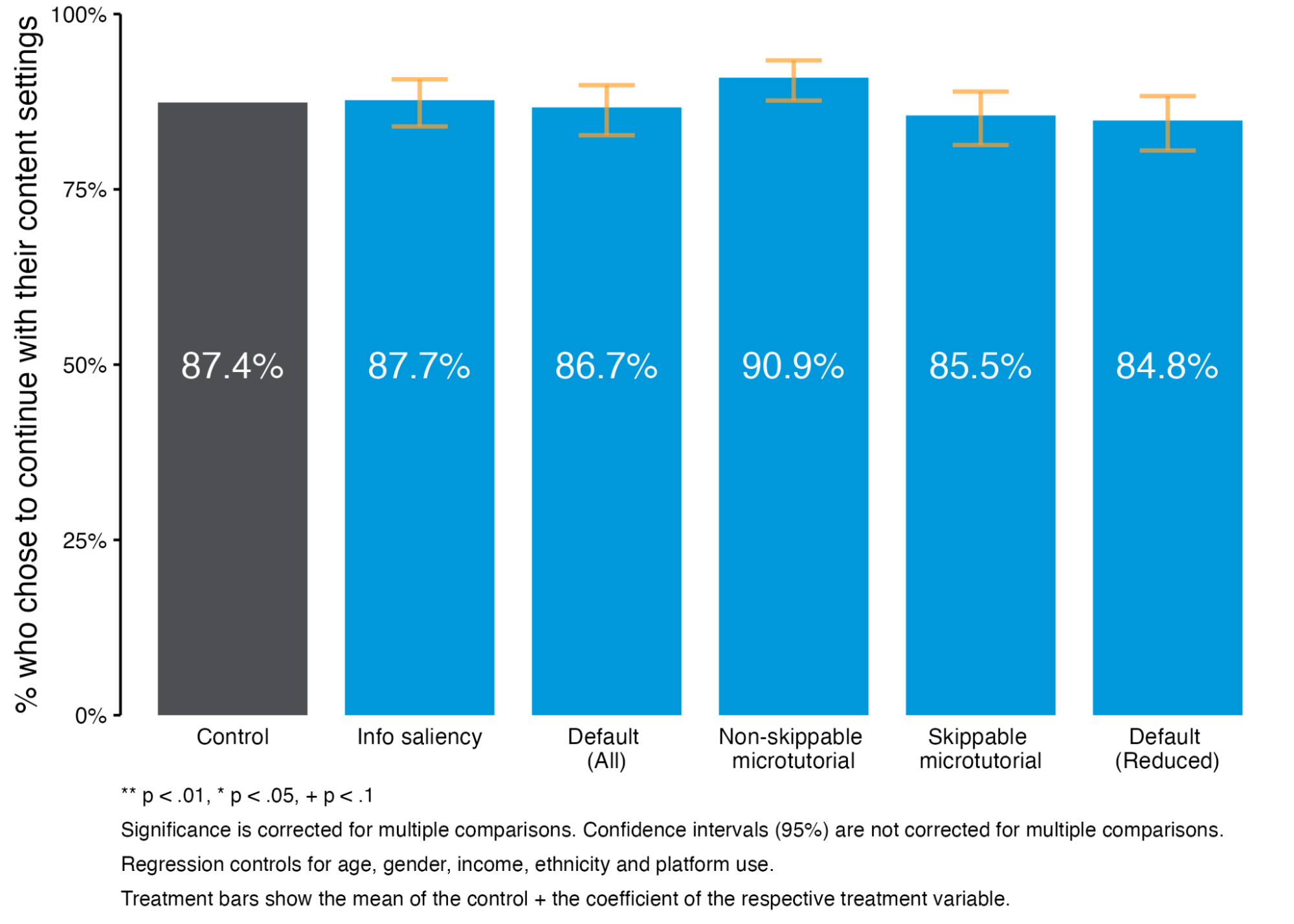

When people were asked to make an active, informed choice at the sign-up stage, and then to review that choice after browsing, the vast majority stuck to their initial choice.

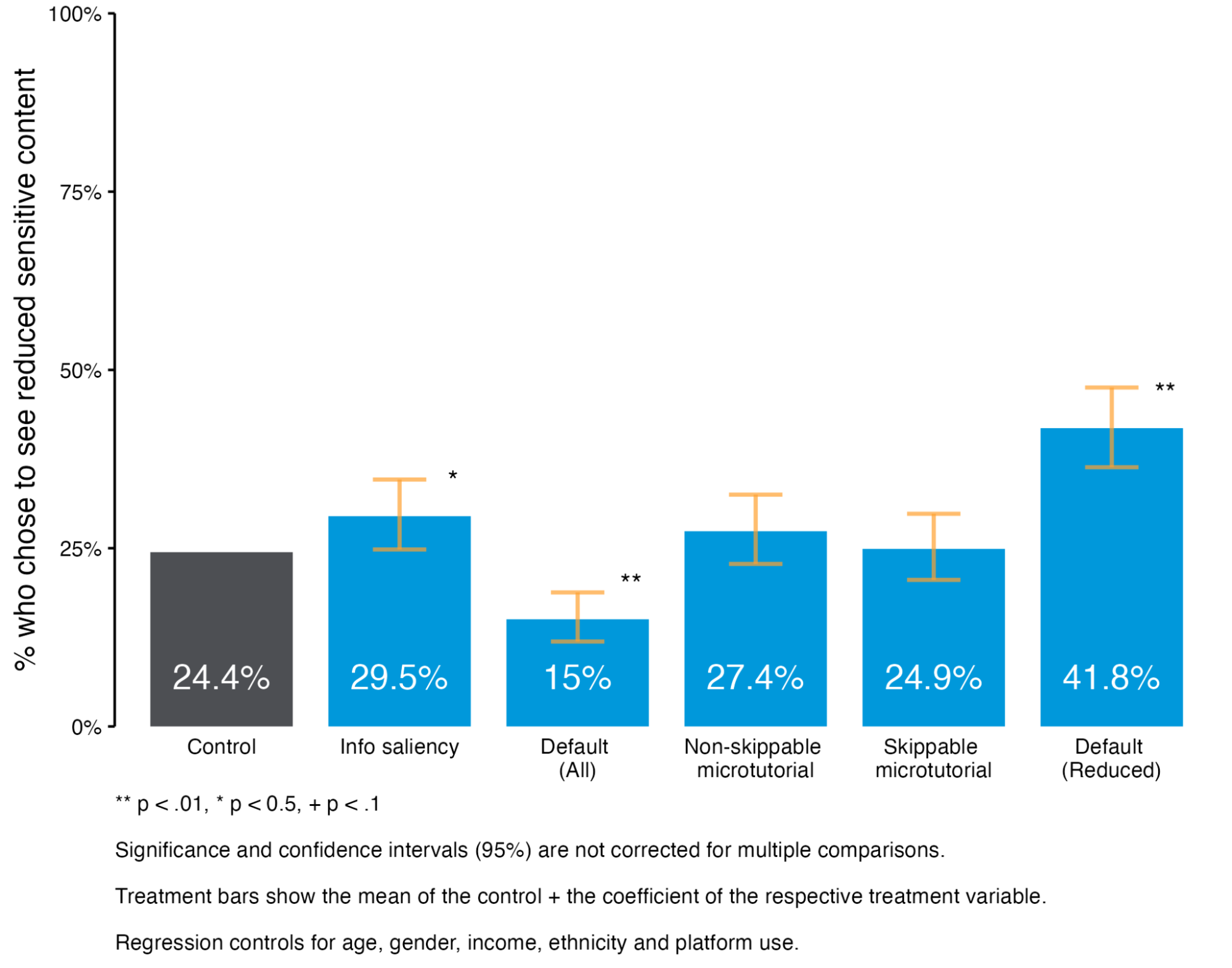

This is particularly interesting when we look at how people arrived at this initial choice. The choice architecture, or how the choice was presented to users, appears to have a significant influence. Pre-selection of an option had a pronounced effect. When ‘Reduced sensitive content’ was pre-selected, 42% chose that option. But when ‘All content types’ was pre-selected, only 15% overrode the pre-selection and chose ‘Reduced sensitive content’.

And yet, when offered the chance to review their choice after browsing, only 1 in 10 chose to update their settings, regardless of the extent to which their initial choice was shaped by choice architecture. The initial choice is remarkably ‘sticky’ and there is little interest in making changes.

Check-and-update: Prompting to check their settings during browsing

Prompts that came after people had viewed sensitive content performed better than prompts that came at the beginning. Perhaps not surprisingly, exposure to potentially harmful content increases people’s appetite to engage with their content settings.

A prompt that emphasised how easy it was to update settings performed better than one prompting users to take control of their feed. Both prompts were effective, but the message focussing on ease trumped the message designed to increase users’ sense of empowerment. For an ease-focused prompt to be effective in practice, the underlying process would need to be made simple as well. Regular behavioural audits, which involve systematically reviewing online design practices and evaluating their impact on users, may be useful to understand how easy or difficult it is to change different settings.

However, even the best-performing prompt (one which came after browsing and emphasised the ease of reviewing settings) only saw 23% of users checking their settings, similar to the 26% of surveyed users who said they use their content controls. The appetite to optimise content settings does not seem to be high.

Two key lessons from these trials

It’s important to remember that these experiments have limitations and don’t perfectly reflect real-world behaviour. Nevertheless, these results suggest two lessons. Platform choice architecture can have a substantial effect on user choices of content controls. But at the same time, content controls may be a blunt tool for helping users manage their online experience. Most users don’t seem to feel strongly about getting their settings right. People may prefer to deploy more ‘in the moment’ tools to shape their online experience, like skipping content or blocking accounts. It may be that people prefer these kinds of options to a binary choice of content setting that doesn’t allow them to fine-tune what content they see. The risk of that approach, of course, is that users are exposed to harmful content they’d rather avoid before they’ve had a chance to take evasive action. Addressing this risk will require us to promote media literacy by design and develop content controls that are adaptive and subtle enough to reflect the preferences of the users they aim to support.

*Following the completion of the trial, BIT ran an exploratory mini-experiment to investigate the effect of pre-selecting ‘Reduced sensitive content’ on the initial choice page. This arm was run separately post hoc and is therefore treated as exploratory.