In our increasingly online world, new challenges and opportunities arise rapidly that affect billions of users – from scams to other online harms. The consequences are serious and the problems are growing. How can we address these issues at pace and shape online environments that maximize social good?

Leading technology companies know there is a strong and global responsibility to respond to the evolving threats and harms. Now is the time to supplement and bolster the views of boardrooms and CEO offices – and ask users directly.

By utilizing deliberative processes, we can introduce democratic decision-making to the online world, elevating the informed and collective voice of the public to shape how we referee digital life.

Our ongoing partnership with Meta has yielded strong evidence that deliberative processes are promising mechanisms for platform governance. We found that they are feasible to run, create space for informed, high-quality discussions, and produce concrete, actionable recommendations.

Last October, building on earlier deliberative processes and our focus on AI, BIT designed and delivered a third Community Forum (CF), sponsored by Meta and run in collaboration with Stanford University’s Deliberative Democracy Lab.

Meta CFs bring together diverse groups of people to discuss tough issues, consider hard choices, and share their perspectives on a set of recommendations that could improve the experiences people have across Meta’s apps and technologies every day.

This most recent CF focused on the principles guiding the responsible development of AI chatbots.

About the Community Forum on generative AI

To help ensure generative AI has a positive impact on our lives, the public should have a say in how it’s created. Otherwise, it risks causing harm when it should be improving outcomes.

The CF gave 1,500+ people across Brazil, Germany, Spain, and the United States this voice.

They discussed a series of proposals under two key themes:

- What principles should guide how AI chatbots offer guidance?

- What principles should guide how AI chatbots interact with people, and help them interact with others?

Our team analyzed a sample of these discussions to explore participants’ mental models, priorities, perceptions of the CF process, and more. We translated these insights into actionable recommendations for tech companies to incorporate informed public opinion into their responsible AI principles. Here’s a snapshot of what we found:

Building a common baseline

Some participants were more familiar with generative AI than others. Our team’s leadership in the science of human behavior enabled us to develop educational materials that created a shared knowledge baseline.

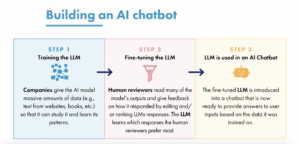

This excerpt from the participant education materials we created shows how one of the recommendations up for discussion could take shape.

This excerpt from the participant education materials shares key information about how AI chatbots work so participants could understand the stakes of their deliberations.

With these materials, and with expert Q&A panels to answer their specific questions, participants engaged in nuanced and reasoned conversations, drawing on real-life examples and exploring ethical arguments.

Navigating global differences

A key characteristic of the online space is its global nature, and this was central to the issues participants deliberated over.

For example, when considering whether AI chatbots’ responses should prioritize local perspectives over “international” ones, some participants advocated prioritizing international sources for universal or widely accepted issues (e.g., respect for human rights), while still incorporating local perspectives for a more nuanced understanding. Participants in Brazil, however, were an outlier: they stressed that local perspectives were critical in the sources that generative AI uses.

“…It depends on the topic that was requested, but it would be able to take into account mainly information from international organizations, but adapted to the local level…” —Participant from Spain

Although many country groups viewed respect for human rights as a baseline, participants still grappled with the cultural relativity of different ideas, especially around what can be considered “widely accepted.” They acknowledged that this makes it challenging to impose universal standards for AI responses.

There was widespread agreement on a few themes, such as the need for responses to cite sources and for users to be informed when they’re interacting with an AI chatbot. As deliberations progressed, however, distinct priorities emerged around the acceptable use of generative AI and the appropriateness of particular design features.

Participants in Spain and Brazil felt strongly against romantic relationships between humans and AI chatbots; US participants saw legality in the context of AI as an important design feature; and participants from Germany and Spain agreed that an ethical and universal code was critical for the safe deployment of AI.

Participants valued their experience in the Community Forum

In general, participants saw the CF as an effective process for increasing awareness of the ethical guidelines related to generative AI. They also noted the quality of both the deliberations and the experts as influences on their positive experiences.

“I’d never stopped much before to think about the topic. So it helped me to reflect a lot on an important topic and I’ve learned a lot…” —Participant from Brazil

Toward the end of the CF, participants advocated for tech companies to continue holding deliberative processes and promoting transparency.

Experimentation and evaluation are key to strong deliberative processes

Meta has previously collaborated with BIT on CFs about problematic climate content on Facebook and harassment in the Metaverse. We rigorously evaluated these deliberative processes to determine which elements made them engaging and user friendly. The results from this research were key to the success of this third CF on generative AI.

Recently, there has been a call for more behavioral experimentation within deliberative processes to ensure they are equitable and inclusive, and produce meaningful outcomes at scale.

BIT stands by this call to generate evidence, and we hope that other organizations will join us in this endeavor.

As applied behavioral researchers, we know that the design of a process has significant effects on the behaviors of participants. With our deep specialist insight and experience, we can help make deliberative processes such as these as effective as possible, and improve the outcomes that all stakeholders (users, organizations, regulators, and more) care about most.

If you’re interested in exploring how we can help you run effective deliberative processes, please contact us here.